Gerald Nestler 2015

RESOLUTIONIZATIONS. self-organized

A-Symmetry -

Algorithmic Finance and the Dark Side of the Efficient Market

Financial market actors manage their risks through what are seen as efficient trading schemas, often while creating new inefficiencies and systemic risks in turn. Starting from the Flash Crash of 2010, artist and theorist Gerald Nestler investigates the problem of information asymmetries in high-frequency trading, demonstrating the need for a new aesthetics of resolution.

INTRODUCTION.

CAPITULATION AUTOMATIQUE. RESOLUTION AND DISSOLUTION BEYOND VISIBILITY

99 per cent of finance doesn’t know how the stock market works. —Haim Bodek

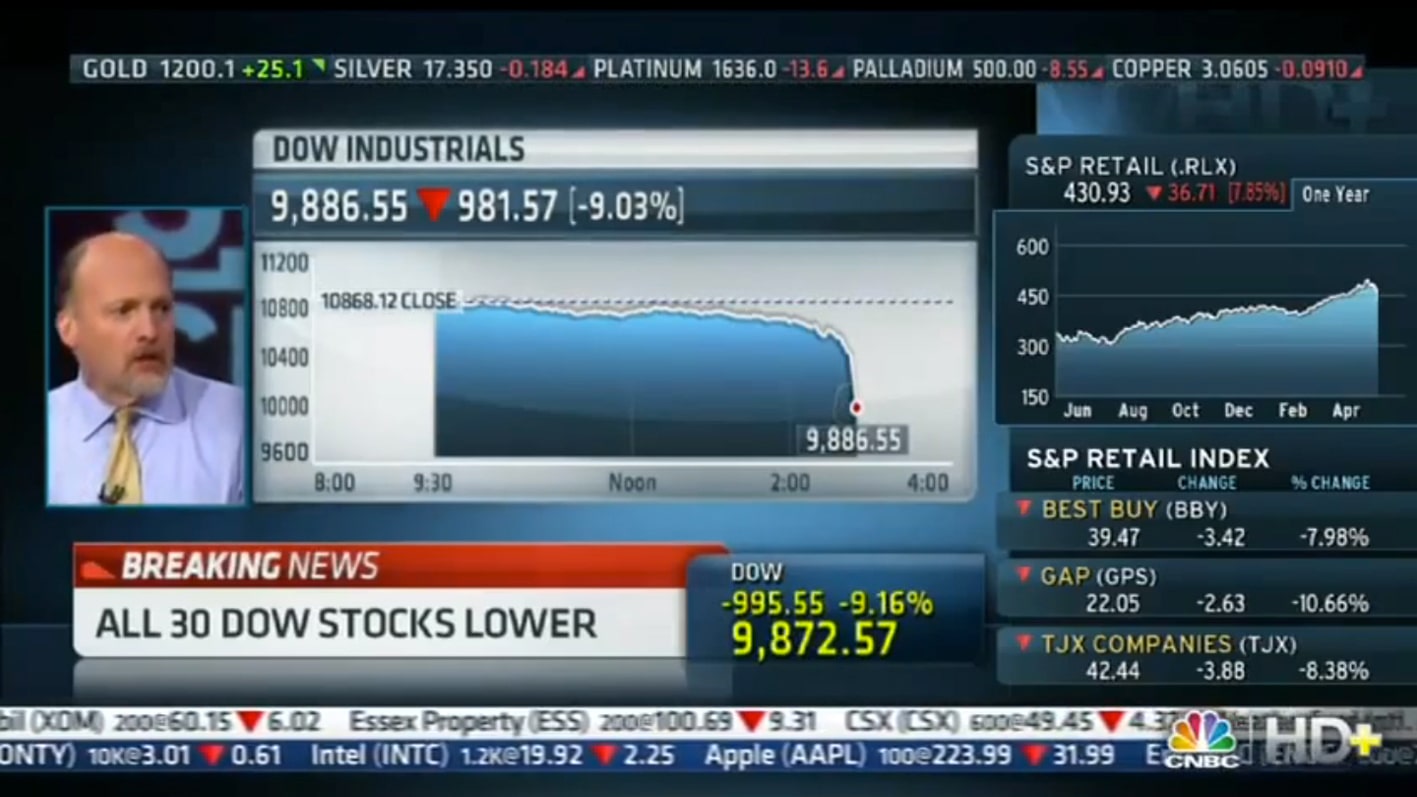

On May 6, 2010, bots played havoc among financial market centers, causing mayhem in less than five minutes. The Flash Crash, as it has become known, went viral as the biggest one-day decline in the history of financial markets. During the rapid slump, the Dow Jones Industrial Average plunged by about 1,000 points – nine per cent of its total value – only to recover most of its losses in the next twenty minutes. CNBC Live, initially covering the political stalemate of the Greek austerity crisis and the ensuing protests in the streets of Athens, shifted immediately to the trading floor of the New York Stock Exchange: “What the heck is going on down there? […] I don’t know […] this is fear, this is capitulation.” [Quoted from (https://www.youtube.com/watch?v=Bnc9jR2WDgo).]

The Flash Crash constitutes a watershed event in financial markets. Algorithmic trading had taken center-stage. Human traders lost their bearings in the event and a live broadcast for professional traders commented: “This will blow people out in a big way like you won’t believe.” [Ben Lichtenstein, the “voice of the CME S&P futures pit,” Traders Audio, “May 6, 2010 Stock Market Crash,” May 12, 2010. The original source is no longer available online but the quote can be found here: Gerald Nestler, COUNTERING CAPITULATION. From Automated Participation to Renegade Solidarity, single channel video, 11:20 min., 2013-14, from 10:36 (https://vimeo.com/channels/aor).] Technically, capitulation means panic selling due to pessimism and resignation. But apart from financial losses, the term “capitulation” implies a liquidation of visibility in the sense of unmediated human perception and collective resolution. Hence, the broadcast highlighted the relevance of political economy today. What, one may ask, informs such potent noise without leaving much of a trace? And how does it affect us?

The debate that ensued in the aftermath of this ferocious event pitted for the first time in financial market history those who upheld the generally accepted opinion, which blames human error, against a dissenting opinion, which held algorithmic trading and automation accountable. As collateral, high-frequency trading (HFT) came to public attention. Journalists and bloggers picked up on the topic and eventually it appeared as if the financial markets were at the mercy of smart quants and developers who tuned hardware, tweaked infrastructure, and coded algorithms to drive these automated speculations. [To list all the blog entries, articles, and publications on this topic would go beyond the scope of this essay (the Wikipedia entry “2010 Flash Crash” lists some of the more noted contributions). I mention only two books, which became bestsellers: Scott Patterson, Dark Pools: The Rise of A. I. Trading Machines and the Looming Threat to Wall Street. New York: Crown Publishing Group, 2012; and Michael Lewis, Flash Boys. New York: Norton & Company, 2014.] The HFT expert and whistleblower Haim Bodek speaks of “avalanching” to describe the real-time violence with which HFT orders impact markets – a massive meltdown that would dwarf the Flash Crash is an event with a probability far above zero.

HFT achieved unparalleled market shares (up to 80 percent of US stock transactions in 2012) due to the high trading volume necessary to deliver profits (see below). However, the impact of HFT as the characteristic feature of contemporary finance is often exaggerated. Revenues are relatively small when compared to the total market. [Revenues in the sector were at 7.2 billion US dollars in 2009 but diminished to 1.3 billion in 2014.] More recently, HFT market share has dropped due to hypercompetition and the infrastructure costs that accrue in the effort to stay competitive in markets with diminishing bid-ask spreads (a result of HFT competition). [See, for example, the Deutsche Bank Report High Frequency Trading: Reaching its limits (https://www.dbresearch.com/PROD/DBR_INTERNET_EN-PROD/PROD0000000000406105/High-frequency_trading%3A_Reaching_the_limits.pdf).] However, automation and algorithms have, apart from exploiting “predictive structures,” [Donald MacKenzie, “A material political economy: Automated Trading Desk and price prediction in high-frequency trading,” Social Studies of Science (article first published online: December 6, 2016, DOI: https://doi.org/10.1177/0306312716676900), p. 6.] ushered in a new trading paradigm: the direct interception into the pricing mechanisms relying on speed (or, more technically, low latency).

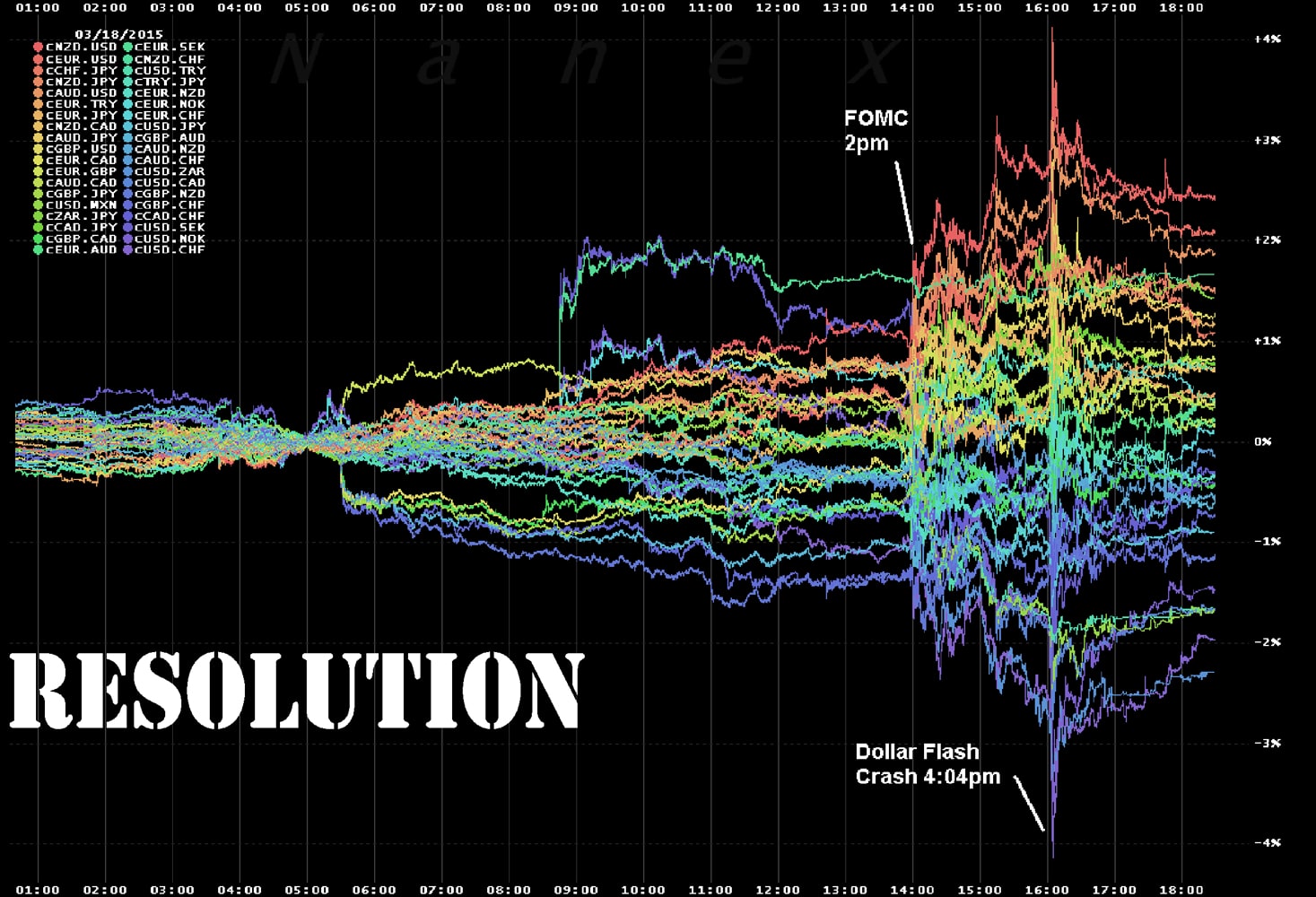

In order to examine the consequences of HFT I resort to the term “resolution” and its relation to immediacy as visibility. What are the most consequential implications of quantitative trading in a hypercompetitive environment? The diverging interpretations of the Flash Crash of 2010 can help us to delineate the issue of HFT and its regulation as a starting point to tackle some of the artificial unknowns that shape this practice today. My interest is not in resolving which of the two positions holds true – both the official joint report by the Securities and Exchange Commission (SEC) and the Commodity Futures Trading Commission (CFTC) and the analysis by the financial data provider Nanex are controversial and have drawn understandable criticism. Instead, I will focus on the “visibility conditions” (how they relate to immediacy) and the resolution philosophy that informs them.

THE FLASH CRASH. RESOLUTION IN MICROTIME

A distributed system is one in which the failure of a computer you didn’t even know existed can render your own computer unusable. —Leslie Lamport [Leslie Lamport in: My Writings Distribution 75. Email message sent to a DEC SRC bulletin board at 12:23:29 PDT, May 28, 1987 (http://lamport.azurewebsites.net/pubs/distributed-system.txt).]

The investigation of the Flash Crash resulted in a joint official report by the US regulatory authorities, the SEC and the CFTC. [“Findings Regarding the Market Events of May 6, 2010: Reports of the staffs of the CFTC and SEC to the joint advisory committee on emerging regulatory issues,” September 30, 2010 (http://www.sec.gov/news/studies/2010/marketevents-report.pdf).] It was published a few months after the incident and put the blame on human trading. A contrasting analysis of the event conducted by a small financial data provider claimed that the crash was in fact caused by orders executed automatically by algorithms. Nanex LLC, a financial service provider, records trading data and was therefore in a position to examine the event on their own account. [Nanex is a market research firm that supplies real-time data feeds of trades and quotes for US stock, option and futures exchanges. Their website states, “[W]e have archived this data since 2004 and have created and used numerous tools to help us sift through the enormous dataset: approximately 2.5 trillion quotes and trades as of June 2010.”]

The SEC and CFTC based their official report on the material made available by exchanges and market participants, which usually has a resolution of one-minute trading intervals. This dataset would have been adequate to scrutinize trading activities before the ascent of HFT. But today, “in the blink of an eye, the market moves what used to take humans thirty minutes.” [Transcript of Adam Taggart, “Eric Hunsader: Investors Need to Realize the Machines Have Taken Over. The blink of an eye is a lifetime for HFT algos,” Peak Prosperity, October 6, 2012 (http://www.peakprosperity.com/podcast/79804/nanex-investors-realize-machines-taken-over).] The quote by the founder of Nanex illustrates the sheer pointlessness of scrutinizing market activity at a time resolution coarser than the transaction frequency of the fastest traders. Their professional experience with market data allowed Nanex to intuitively escape the trap of the one-minute resolution, which in the case of HFT conceals more than it reveals. [Another example is the crash of Knight Capital in 2012. Nanex, who analyzed the incident, remark: “If we zoom in and look at what happens under one second, then a clear pattern emerges. We think it’s important to note that the SEC claimed there is no value to be gained from looking at data in time resolutions under a second ‘because it is just noise’. We strongly disagree.” (http://www.nanex.net/aqck2/3522.html).] They realized that conventional market data records did not show any material traces of what might have initiated the rupture, which tore the intricate fabric of market prices. They decided to delve deeper into the “abyss” of micro-time and look at fractions of a second. Step-by-step, they enhanced the temporal resolution and eventually, at a dizzying depth of time, the material traces of the Flash Crash came into view.
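The effect of temporal resolution described above can be made concrete with a small sketch. All data here are invented for illustration: a hypothetical minute of quote timestamps with a steady background and a burst of 500 quotes packed into 50 milliseconds. The function name `bucket_counts` and the numbers are assumptions, not Nanex's actual tooling or dataset. The sketch only shows that the same records look unremarkable at one-minute resolution and reveal a pattern once the time buckets shrink.

```python
from collections import Counter

def bucket_counts(timestamps_ms, bucket_ms):
    """Count events per time bucket of width bucket_ms (in milliseconds)."""
    return Counter(t // bucket_ms for t in timestamps_ms)

# Hypothetical data: one quote per second over a single minute ...
background = [s * 1000 for s in range(60)]
# ... plus an invented burst of 500 quotes within 50 ms, starting at second 30.
burst = [30_000 + i // 10 for i in range(500)]
quotes = sorted(background + burst)

per_minute = bucket_counts(quotes, 60_000)  # the "official" resolution
per_second = bucket_counts(quotes, 1_000)   # one-second resolution
per_10ms = bucket_counts(quotes, 10)        # sub-second resolution

# At one-minute resolution there is only a single aggregate number.
minute_total = per_minute[0]
# Finer buckets locate the burst and show how dense it is.
busiest_second, second_peak = per_second.most_common(1)[0]
busiest_10ms, ms_peak = per_10ms.most_common(1)[0]

print("quotes in the minute:", minute_total)
print("busiest second:", busiest_second, "with", second_peak, "quotes")
print("busiest 10 ms bucket:", busiest_10ms, "with", ms_peak, "quotes")
```

In this toy setting the one-minute view yields a single total, while the finer views show that almost all activity falls into a fraction of one second. Real market data would of course be far larger and noisier.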

When Nanex made a strike of market activity far below the threshold of perception, what they “saw” at first glance looked like a glitch. But what emerged from the forensic analysis were the imprints of an elaborate scheme. They had encountered information in a realm that was deemed to only emit noise if anything. [Noise as opposed to signal is the term for random information in information theory. As financial markets are constructed as information markets (both in the Hayekian sense of the price regime and cybernetics), noise is a constituent element of trading. Following Fischer Black we can define it as the ubiquitous other of information: “Noise makes financial markets possible, but also makes them imperfect,” in Fischer Black, “Noise,” Journal of Finance, vol. 41, no. 3 (1986), pp. 529‒43, here p. 530.] As the founder and CEO of Nanex, Eric Hunsader, stated: “The SEC/CFTC analysts clearly didn’t have the dataset to do it in the first place. One-minute snapshot data, you can’t tell what happened inside of that minute. We didn’t really see the relationship between the trades and the quote rates until we went under a second.” [“U.S. ‘flash crash’ report ignores research ‒ Nanex,” Sify Finance, October 5, 2010 (http://www.sify.com/finance/u-s-flash-crash-report-ignores-research-nanex-news-insurance-kkfiEjeciij.html).]

[ZeroHedge was one of the first finance blogs to report on Nanex’s Flash Crash analysis and embedded an interactive map of their findings, see (http://www.zerohedge.com/article/most-detailed-forensic-analysis-flash-crash-date-and-likely-ever).] Nanex’s final statement was unambiguous: “High Frequency Trading caused the Flash Crash. Of this, we are sure.” [See Nanex ~ 26-Mar-2013 ~ Flash Crash Mystery Solved (http://www.nanex.net/aqck2/4150.html).] Even though Nanex found evidence of trades, they could not provide evidence identifying the perpetrator because the law protects proprietary data and its source.

Excerpt from COUNTERING CAPITULATION. From Automated Participation to Renegade Solidarity, single channel video, 2013-14.

I won’t address the truth claims of Nanex’s and the official reports. My main concern is the discrepancy between the material traces and their consequences. I will therefore focus on Nanex as one provider of a set of forensic resolutions on the material data of the Flash Crash. [The author has developed an aesthetic concept on the term “resolution” which due to space cannot be presented here. For those interested, this link provides more information: https://researchcultures.com/issues/1/towards-a-poietics-of-resolution.html.] It raises the question of how resolution techniques operate as regards visualization (making tools that enhance perception and render material evidence), evaluative measuring (computation / calculation of sequences and relations) and knowledge-production (analysis / interpretation of material). Even though such high-resolution telescopes offer glimpses into financial microtime, attribution and solution – the decisive elements of the semantic field of the term resolution – are beyond the capacity of Nanex or any third party (both market participants and the general public). The question as regards the actuator(s) of the Flash Crash has as yet not been fully resolved. [This essay is also not concerned with the recent exposure of the ostensible culprit. According to a news report, “Mr Sarao’s spoofing netted him a profit of $40m (£28m), according to the US,” the Independent, February 5, 2016 (http://www.independent.co.uk/news/business/news/navinder-singh-sarao-british-flash-crash-trader-broke-no-laws-says-lawyer-a6856791.html).]

REPERFORMATIVE FORENSICS IN A HYPERCOMPETITIVE ENVIRONMENT

All consciousness is a matter of threshold. —Gilles Deleuze [Gilles Deleuze, The Fold: Leibniz and the Baroque, trans. Tom Conley (Minneapolis: University of Minnesota Press, 1993), p. 64.]

An investigation into the complexity of market interplay is not only confronted with one or several black boxes but with the meta-black box of the market per se, which encompasses the entire system in its complexity, including but not restricted to brokers, traders (in the broadest term), and market centers. As the former HFT trader David Lauer remarks:

The markets and the interplay in the industry between all these firms with all these very complicated and complex technology systems and how they interact makes the entire system of exchanges, high-frequency, brokers and the interaction between the technology, it makes it a complex system. […] There is no cause and effect that you can point to. What caused the Flash Crash is a nonsense question. […] And, if you were to replay the same sequence of events, identically, there’s no guarantee that it will cause a Flash Crash again. That’s the nature of complex systems. [David Lauer in Marijke Meerman (dir), The Wall Street Code, documentary, 51 min (http://www.youtube.com/watch?v=kFQJNeQDDHA, at 46:00–46:48).]

Nanex, after failing to attribute motive and blame, amended their strategy towards an investigation that mixed forensic analysis with “witness review” by information disclosure. They asked the party blamed (though not identified) by the official report, the mutual fund Waddell & Reed, to grant access to their trading data. In accordance with the capitalist proprietary regime, it is most plausible that the fund would have declined if they had not been blamed. But by that time, Waddell & Reed had a vested interest in clearing their name. The incorporation of the proprietary source code allowed Nanex to classify the data of a specific address. Their analysis relied on an apparatus that combines four quantitative frameworks in an effort to deliver sufficient approximation to the trading operations: Nanex’s extensive archive of financial data; quantitative analysis; custom-made, adaptive resolution devices that powered the investigation of the data sets; and the algorithmic trading data from the financial black box (the mutual fund and the executing algorithm of their broker Barclays Capital Inc.). [See, for example, Herbert Lash, “CFTC, Barclays discussed Waddell algorithm-source,” Reuters, October 22, 2010 (http://www.reuters.com/article/flashcrash-barclays-idUSN2219178020101022).] This framework led Nanex to deviate from the official narrative. Even if their interpretation is controversial (algo traders, for instance, hailed the official report), it helped bring the cybernetic regime of HFT to light.

A paper presented in 2014 co-authored by members of the original investigation team of the SEC and CFTC report maintains the official narrative (2010) that “HFT did not cause the Flash Crash, but contributed to extraordinary market volatility experienced on May 6, 2010. […] high frequency trading contributes to flash-crash-type events by exploiting short-lived imbalances in market conditions.” [Andrei Kirilenko et al., The Flash Crash: The Impact of High Frequency Trading on an Electronic Market (2014), p. 2, see (http://www.cftc.gov/idc/groups/public/@economicanalysis/documents/file/oce_flashcrash0314.pdf).] The authors identify immediacy as problematic, as it is exacerbated by HFT to its own benefit.

Because advanced trading technology can be deployed with little alteration across many automated markets, the cost of providing intermediation services per market has fallen drastically. As a result, the supply of immediacy provided by the HFTs has skyrocketed. At the same time, the benefits of immediacy accrue disproportionally to those who possess the technology to take advantage of it. As a result, HFTs have also become the main beneficiaries of immediacy, using it not only to lower their adverse selection costs, but also to take advantage of the customers who dislike adverse selection, but do not have the technology to be able to trade as quickly as they would like to. These market participants express their demands for immediacy in their trading orders, but are too slow to execute these orders compared to the HFTs. Consequently, HFTs can both increase their demand for immediacy and decrease their supply of immediacy just ahead of any slower immediacy-seeking customer. [Kirilenko et al., The Flash Crash, p. 3.]

Immediacy, I would argue, defines visibility as a performative issue. In relation to market activity – especially in times of high volatility but not restricted to these moments – immediacy equals visibility, or, more accurately, immediacy is technological visibility constructed by resolution techniques. Hence, developers in the algorithmic trading space increase obscurity within the entire playing field by narrowing, if not modifying, the field of visibility (of the order book, to be precise). This instance is the most current in a row of performative revolutions in finance that started with the displacement of (human) floor traders by quants (the framing of finance by Black Scholes Merton (BSM); the quantification of the volatility curve in the pricing regime) and goes hand in hand with shifts between cost centers and profit centers. [I owe this assessment to the HFT expert and whistleblower Haim Bodek with whom I am currently working on the cartography of algorithmic trading.]

When we accept immediacy as a form of visibility in micro-time – engineered by resolution techniques that both enhance the visual field and act upon it (and obscure it for those lacking the means) – we can adopt Michel Callon and Donald MacKenzie’s remarks on performativity. While MacKenzie focuses on a different realm of financial market activity – namely derivatives trading and the BSM model, and hence the profit model of finance that was challenged by HFT and other low latency trading applications – Callon offers a wider interpretation of performativity: “My thesis is that both the natural and life sciences, along with the social sciences, contribute towards enacting the realities that they describe.” [Michel Callon, What does it mean to say that economics is performative?, CSI Working Papers Series no. 005, 2006, p. 7.] The black box as a scientific apparatus has conquered finance and constructs its reality in a similar way as economic theories acted on it. When we think of all the flash crashes that keep recurring or the “avalanching” Bodek mentions, the term “counterperformativity” [MacKenzie, in An Engine, not a Camera: “The strong, Barnesian [a term he uses to distinguish his sociological from Austin’s linguistic approach] sense of ‘performativity,’ in which the use of a model (or some other aspect of economics) makes it ‘more true,’ raises the possibility of its converse: that the effect of the practical use of a theory or model may be to alter economic processes so that they conform less well to the theory or model. Let me call this possibility – which is not explicit in Callon’s work – ‘counterperformativity.’” See Donald MacKenzie, An Engine, not a Camera. Cambridge, MA: MIT Press, p. 19.] seems to highlight this fact even more robustly:

Whereas the notion of a self-fulfilling prophecy explains success or failure in terms of beliefs only, that of performativity goes beyond human minds and deploys all the materialities comprising the socio-technical agencements that constitute the world in which these agents are plunged: performativity leaves open the possibility of events that might refute, or even happen independently of, what humans believe or think. MacKenzie proposes the notion of counter-performativity to denote these failures, because in this case the [Black Scholes Merton] formula produces behaviors that eventually undermine it. [Callon, What does it mean to say that economics is performative?, p. 17.]

Due to the complexity described by Lauer, the market cannot simply be “captured” in all its immediacy and “replayed” like a film. [In a philosophical discussion of this thought, Jon Roffe emphasizes the “eventual character of price […], for, strictly speaking, no price can ever be repeated. This is because any given price is recorded on a surface and in this way changes it. To repeat the same price – where price is now grasped at the moment of its advent – can never have the same effect on the market surface itself.” See Roffe, Abstract Market Theory. Basingstoke: Palgrave Macmillan, 2015, p. 71.] The vision-enhancing sensors that detect time-blurred traces and mark discriminations in a complex environment deliver information from noise, which has to be unearthed and then resolved in a separate stage. Thus, a forensic analysis is neither fully embodied nor defined by the abstract representations of data traffic. Rather, the analysis is situated, i.e. constructed, in between the juncture of performance as the actual presence of an event taking place (exemplified by the occurrence of the Flash Crash); performative analysis as providing (making visible) “visual collateral” of a “re-animation” of the original obscured presence after the fact; and beyond market activity per se, and thus also beyond MacKenzie’s use of the term, counterperformativity as the effect of a renegade act disclosing material otherwise under non-disclosure as a consequence of “capitulation.” [The performative setting of Nanex’s analysis might have influenced its outcome counterperformatively in the sense that analysis constructs findings and solutions.]

We can now outline a sharper distinction, which will help us to grasp what is at play in the forensic documentation and evaluation apparatus. Artificial sense organs reach into micro-time by increasing the resolution bandwidth in order to revisit the otherwise insensible “scene of the crime.” The analysis is thus an intricate and extensive cybernetic undertaking characterized by a process of re-mapping, re-modeling, re-visioning, and re-narrating a specific past that happened at near-light speed—a performance ex post that was the occurrence of a future event. As this approach re-enacts the performance of the event, the methodology can be specified as a reperformance. The technological, calculative aspect of sifting data to come up with evidence – enacting the reperformance – becomes explicit in the sheer enormity of the material Nanex examined:

May 6th had approximately 7.6 billion […] records. We generated over 4,500 datasets and over 1,200 charts before uncovering what we believe precipitated the swift 600 point drop […]. In generating these data sets we have also developed several proprietary applications that identify the conditions described in real time or for historical analysis. [Data quoted from Nanex data feed information (http://www.nanex.net/20100506/FlashCrashAnalysis_About.html).]

Only rigorous research into the deeper, imperceptible strata of microscopic time reveals the actual material matrix. Such an excavation elucidates an inversion of time from Chronos to Kairos – from a chronological interpretation (replay) of pricing to one of intense event time (real time). Methodologically, it inverts the relation between time and space: while the common notion of archaeology entails entering into concrete and thick space cautiously (as when employing technologies of surveying, probing, and classifying), in order to extract the material witness (a truth) of a former era, an archaeology of finance is a forensics of the performance of the future. It probes into the imperceptible materiality of time becoming. It detects patterns and recovers artifacts whose existence is derived from financial models and built on technologies of miniaturization, automation, and infrastructure aligned with the politics of securing, excluding, and enclosing. Research is applied on a field of “making happen” in which “the concept of performativity has led to the replacement of the concept of truth (or non-truth) by that of success or failure.” [Callon, What does it mean to say that economics is performative?, p. 13.] This time around, however, it was not an economic modeling of volatility (i.e. hedging risk) but latency and order-book penetration (i.e. the technologically induced pursuit of riskless profits).

A NOISE WITHOUT SIGNAL: INFORMATION ASYMMETRY

Noise crashes within as well as without. —N. Katherine Hayles [N. Katherine Hayles, How We Became Posthuman: Virtual Bodies in Cyberspace, Literature, and Informatics. Chicago, IL: The University of Chicago Press, 1999, p. 291.]

The Flash Crash narrative unfolds in the extended realm of trading bandwidth and the reduction of profit margins in which a technopolitical regime of success/failure becomes apparent via exclusion/inclusion. It prioritizes the algorithmic aesthesis of an elite of HFT traders. [The conviction of BATS Global Markets by the SEC on the grounds of a preference of a group of influential HFTs – who, as Bodek elucidates, are often shareholders of the exchanges – proves this.] This information asymmetry was due to specific advantageous order types. Manoj Narang, a former champion of HFT, viewed this as part of the competitive ecology of HFT that is synonymous with other technological applications:

[…] many micro-industries experience an initial phase of immense profitability, which in turn attracts a great many new participants. These new participants drive up competition, which in turn causes profit margins to diminish. Eventually, so many participants are competing that margins can turn negative. [Rishi K. Narang (with contributions by Manoj Narang), The Truth about High-Frequency Trading. Hoboken, NJ: Wiley, 2014, pp. 18‒19.]

Bodek, however, whose HFT hedge fund went bankrupt due to “order flow information asymmetries,” gives a darker view of the “noisy” ecology of HFT:

There’s basically interaction in the market. This firm knows how this works here, they know this practice works over there, and they’re able to get to 15% or 20% of the market because they know that – and that’s the only reason they can get there […] you have this efficient market and certain regulators say it’s great, it’s only a penny wide, the customer gets the best price. And now I’m telling you, you can have a firm with 20% of the market and the rules change a little bit and some transparency happens and they collapse to 3%. So, what’s your takeaway? What’s my epiphany? I basically believe that individuals running large trading companies, cannot actually tolerate a zero profit margin environment. We will find ways around that situation. We will cheat. We will manufacture situations. We will undermine infrastructure. [Haim Bodek in Gerald Nestler, CONTINGENT ETHICS: Portrait of a Philosophy series II, 2014, single channel video, at 0:20:24. There seems to be a relation between zero spread and zero marginal utility and the corporate schemes of nevertheless extracting profits by manufacturing monopolies.]

Below the radar of state agencies established to regulate market activity, corporate self-interest created an even deeper level of incorporation programmed into automated trading as the “genetic” code of a new breed of agency in the market system. Mathematical models and algorithms revolutionized the logistic infrastructure of exchanges and displaced the trading pit and its market makers (human traders known as “specialists” or “locals”) in favor of faster execution rates and smaller spreads. [Donald MacKenzie and Juan Pablo Pardo-Guerra argue that “[t]wenty years ago, share trading in the US was still almost entirely human-mediated and mostly took place in just two marketplaces: NYSE [New York Stock Exchange] and NASDAQ [National Association of Securities Dealers Automated Quotations]. Now, there are thirteen exchanges and more than fifty other trading venues. Only a very small minority of deals are now consummated by human beings: the heart of trading is tens of thousands of computer servers, in often huge datacenters linked by fiber-optic cables carrying millions of messages a second.” See MacKenzie and Pardo-Guerra, “Insurgent capitalism: Island, bricolage and the re-making of finance,” Economy and Society, vol. 43, no. 2 (2014), pp. 153‒82.] Algorithmic traders instituted an arena around and between matching engines where they intervene without human intermediaries. Inigo Wilkins and Bogdan Dragos argue that “algorithms are no longer tools, but they are active in analyzing economic data, translating it into relevant information and producing trading orders.” [Inigo Wilkins and Bogdan Dragos, “Destructive Destruction? An Ecological Study of High Frequency Trading,” Mute, January 22, 2013 (http://www.metamute.org/editorial/articles/destructive-destruction-ecological-study-high-frequency-trading#).] J. Doyne Farmer, a former financial engineer and co-director of the program on complexity economics at Oxford’s Institute for New Economic Thinking, notes, “under price-time priority auction there is a huge advantage to speed.” [J. Doyne Farmer, “The impact of computer based training on systemic risk,” Paper presented at the London School of Economics, London, January 11, 2013.] And as exchange places are also profit-seeking corporations, the competition expands to the market system in its entirety. Exchanges are not a kind of public place in which capitalist competitors meet; they are players with their own business interests and offer structural as well as financial incentives to increase trading volume, to give but one example.
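Farmer's remark on price-time priority can be illustrated with a minimal sketch. It assumes a simplified matching rule (resting sell orders are ranked by lowest price first, then by earliest arrival) and uses invented traders, prices, and arrival times. The function `fill_by_price_time` is a hypothetical helper, not the matching logic of any actual exchange.

```python
def fill_by_price_time(resting, incoming_qty):
    """Allocate an incoming buy quantity to resting sell orders.

    resting: list of (trader, price, arrival_time_us, qty) tuples.
    Orders are ranked by lowest price first, then by earliest arrival.
    Returns a dict mapping trader name to filled quantity.
    """
    fills = {}
    ranked = sorted(resting, key=lambda order: (order[1], order[2]))
    for trader, _price, _arrival, qty in ranked:
        if incoming_qty == 0:
            break
        traded = min(qty, incoming_qty)
        fills[trader] = fills.get(trader, 0) + traded
        incoming_qty -= traded
    return fills

# Invented example: two traders quote the same price, but one reaches
# the matching engine 5 microseconds after a signal, the other after 900.
resting = [
    ("slow_fund", 100.00, 900, 300),
    ("fast_hft", 100.00, 5, 300),
    ("other", 100.01, 1, 300),  # earliest of all, but at a worse price
]

fills = fill_by_price_time(resting, 400)
print(fills)
```

At identical prices the faster participant is filled completely before the slower one receives anything, which is the speed advantage the quote refers to. Being early at a worse price does not help, since price ranks before time.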

What emerged is a hypercompetitive environment that tweaks market system rules and infrastructures. It “skews” the smile problem from the surface of derivatives-pricing to the immediacy of latency volatility – a term I propose for the inconsistencies (rather than uncertainties) in a hypercompetitive trading environment, in which developers have replaced quants as the leaders of innovation. Even if there is an absolute limit to speed, the operable spaces of time between human perception and algorithmic reaction time are cosmic, to say the least. A divide of response time has opened up, the gaping but invisible abyss of latency: a new class of resolution enclosures and scales – and hence knowledge – has found the means to effectively hide its machinations from less immediate competitors. If derivative finance and its abstract models were (and still are) a mystery to the public at large, automated trading has escalated finance beyond all recognition. Here, Gottfried Wilhelm Leibniz’s notion of apperception ceases to be a conception of conscious experience emerging from small, unconscious perceptions. The myriads of mathematically constructed small perceptions (of which these camera-engines are not at all “unconscious”) define a virtual field of machine apperception where those who do not command the latest cyborg infrastructure are captured or blocked. Information asymmetries gain traction on the level of systemic visibility. The financial market architecture with its proprietary logistics is a black box not only with regard to the parameters of official inquest, but also in terms of knowability in general (and beyond algorithmic trading proper).

What the high-frequency black box emits is not information but noise. Such technowledge (a term I use to distinguish bot-coded acquisition of knowledge) not only exerts influence on the industry but, of necessity, also incapacitates the public forum as a whole. Quantitative speech translates into intense algorithmic violence, invisible and insensible, built on performances that are real but unrecognizable (fictitious capital in the sense of applying tricks and asymmetries but not in the sense of it being insubstantial). Noise exceeds the category of information theory. It is the asymmetric other that is not detected by the majority of market participants because it is not a signal in the sense of communication. In the ever-denser, hypercompetitive environment of narrowing spreads, only the ruthless survive. Noise is a guerrilla tactic that stops at nothing in its “pursuit” of profit.Haim Bodek’s whistle-blowing proved that market centers, too, are partners in crime; see, e.g., Bradley Hope, “BATS to Pay $14 Million to Settle Direct Edge Order-Type Case: SEC Fine Is a Record Amount Against a Stock Exchange,” The Wall Street Journal, January 12, 2015 (http://www.wsj.com/articles/direct-edge-exchanges-to-pay-14-million-penalty-over-order-type-descriptions-1421082603). It is not simply a tool; it is a weapon of counterinformation that injures without inflicting the feeling of pain directly; a powerful and disruptive “rhythual,” to add the layer of the ‘immediated’ frequency of algorithmic speech to Judith Butler’s performative “ritual”:

The performative needs to be rethought not only as an act that an official language-user wields in order to implement already authorized effects, but precisely as social rhythual [original: ritual], as one of the very ‘modalities of practices [that] are powerful and hard to resist precisely because they are silent and insidious, insistent and insinuating’: When we say that an insult strikes like a blow, we imply that our bodies are injured by such speech.Judith Butler, Excitable Speech: A Politics of the Performative. London and New York: Routledge, 1997, p. 159.

The most cunning insults are indirect. Fischer Black, in his seminal text succinctly entitled Noise, holds that “noise is information that hasn’t arrived yet.”Fischer Black, “Noise,” Journal of Finance, vol. 41, no. 3 (1986), pp. 529‒43, here p. 529. Black counts five models of noise; the one quoted here relates to business cycles and unemployment and as such, we imply, to the wider economy and to social consequences. When we accept, as evidence has shown, that low latency trading is defined by speed advantage, we must ascertain a bifurcation that goes far beyond competitive advantage in Hayekian information markets.See also note 12. Those who do not command the automated rhythual of micro-time face noise as the “silent and insidious” other of information; they cannot perform equally and thus cannot partake in “that reiterative power of [market] discourse to produce the phenomena that it regulates and constrains,”Judith Butler, Bodies that Matter: On the Discursive Limits of Sex. New York: Routledge, 1993, p. xii, accessed online (https://en.wikipedia.org/wiki/Performativity). to adapt Butler’s linguistic reading of performativity to the fitting speculative speech of financial markets.I should note here that adapting Butler’s work to finance is not a novel approach. Arjun Appadurai writes in Banking on Words that “Judith Butler’s work introduced the idea of what I now refer to as retro-performativity, which allows us to see that ritual can be regarded as a framework for the co-staging of uncertainty and certainty in social life.” See Appadurai, Banking on Words: The Failure of Language in the Age of Derivative Finance. Chicago, IL: University of Chicago Press, 2016, p. 111.
We might say that insult as information asymmetry turns into the violence of noise asymmetry and its fictitious claims – a limitation of visibility that forms the space in which the reiteration of algo speech becomes the dominant (cipher) language; an avalanching of “volatility created by circulatory forces so as to preserve and restore [their] liquidity.”Edward LiPuma, “Ritual in Financial Life,” in Benjamin Lee and Randy Martin (eds), Derivatives and the Wealth of Societies. Chicago, IL, and London: The University of Chicago Press, 2016, p. 51 (term “their” and emphasis by the author).

FINANCE AND ITS MODE OF PRODUCTION

Only money is free from any quality and exclusively determined by quantity. —Georg SimmelGeorg Simmel, The Philosophy of Money. London and New York: Routledge, 3rd edition, 2004, p. 281.

While in the early 1990s, quants were still dependent on human runners and traders on the trading floors in Chicago and other major market centers (with the exception of the NASDAQ), artificial (neural) networks and automation of order transaction, order flow, and the price engine took another ten years and more to be implemented fully. The electronic global data flow, represented by the Bloomberg terminal as a non-human extension of the “individual” traders on the trading floor, forged a cyborg dividuality in which every dividual blends into and is bound to the global information and pricing engine.As regards capitalism, one might think of Gilles Deleuze and Félix Guattari’s notions of “body without organs” and “organs without body” in the sense that the corporation could be interpreted as the ‘legal individual’ from whose incorporated organism dividuals derive. See Gerald Nestler, “The Non-Space of Money, or, the Pseudo-Common Oracle of Risk Production,” in Paratactic Commons: amber’12 art and Technology Festival Catalog (December 2013), pp. 150‒54, also online (https://issuu.com/ekmelertan/docs/amber12/1). Uncertainty is turned into a global negotiation on volatility (risk), calibrated dividually and externalized individually in the case of underperformance. In his thesis on proprietary trading, Robert Wosnitzer argues, “the subjectivity of the trader is mutually co-constitutive with the practice of prop trading, where a dividual subject emerges that has mastered, for better or worse, the social and economic value of a world in which the derivative rules.”Robert Wosnitzer, “Desk, Firm, God, Country: Proprietary Trading And The Speculative Ethos Of Financialism,” unpublished PhD thesis New York University, 2014, p. 171; publication forthcoming. For Arjun Appadurai this derivative logic extends beyond the world of trading:

Contemporary finance lies at the heart of […] dividualizing techniques, because it relies on a management and exploitation of risks that are not the primary risks of ordinary individuals in an uncertain world but the derivative or secondary risks that can be designed in the aggregation and recombination of large masses of dividualized behaviors and attributes.Arjun Appadurai, “The Wealth of Dividuals,” in: Benjamin Lee and Randy Martin (eds), Derivatives and the Wealth of Societies. Chicago, IL, and London: University of Chicago Press, 2016, p. 25.

In the course of the production of these claims, Austin’s performativity shifted to Callon’s conception and MacKenzie’s “counterperformativity” (both underscore the impact of economics on finance) and eventually turned into a non-individual ontology of immanence in which technological risk assemblages composed of human and bot actors have become the productive force of complex and contingent open-heart operations on a future in real time. The operational division between uncertainty and risk that Frank Knight introduced in 1921Frank H. Knight, Risk, Uncertainty, and Profit. Boston, MA: Hart, Schaffner & Marx, 1921. and which underlies derivative finance has found its corresponding technologies with the scientification of finance. I abstract this shift by arguing that the mode of production of finance is the production of riskThis is a theoretical concept of the function of financial markets, and not a trivial allegation against the speculative rage of markets and their catastrophic consequences. – the material production and exploitation of quantifiable futures (options) by trading volatility (on volatility and so forth) in a sea of uncertaintyWhile Esposito argues that “uncertainty […] is the engine and stimulus of economic activity, allowing for the development of creativity and the generation of novelties” (Elena Esposito, “The structures of uncertainty: performativity and unpredictability in economic operations,” Economy and Society, 42:1, p. 120), accepted opinion in the social studies of finance holds that markets block out uncertainty in favor of risk. – a state of affairs that goes beyond neoliberalism and financialization and that I propose to term the derivative condition of social relations. Every option is a virtual, quasi-material trajectory into the future and in tight connection with the myriads of other options traded. Deleuze writes:

The only danger in all this is that the virtual could be confused with the possible. The possible is opposed to the real; the process undergone by the possible is therefore a ‘realization’. By contrast, the virtual is not opposed to the real; it possesses a full reality by itself. The process it undergoes is that of actualization.Gilles Deleuze, Difference and Repetition. New York: Columbia University Press, 1994, p. 211.

The recalibration process of dynamic hedging and implied volatility is a “thick narrative” of the Deleuzian virtual – of virtual pricing actualized at every moment (markets trade and no possibility exists). It is a virtual universe that resolves the future by the actualization of price quasi in parallel to real events. As the production of risk is finance’s mode of production – processed by a multitude of complex price layers circulating and recalibrated at any given moment – to say “parallel” is not to say that these worlds do not meet. Quite to the contrary, by blocking out uncertainty and elaborating massive systems that calculate and exploit risk (options), this mode of production is an attractor for reality to emerge within its volatile “gravitational” field. In the words of Elie Ayache, the market is “the technology of the future.”Elie Ayache, The Medium of Contingency. An Inverse View of the Market, Basingstoke: Palgrave Macmillan, 2015, p. 52.

Today, the technology of the future is enshrined in the black box; resolution – both as technology and solution – is proprietary without exception. Externalities do not only appear as the “past” of industrial activity (as its consequence), they affect the future. Consequence trails ahead, as finance – one should rather say the finance-state complex as the result of the 2008 financial crisis – models the world along the lines of probability regimes (this is not confined to markets, as e.g. big data proves). While future profits are being reaped, future losses are socialized (austerity politics, for one, are schemes on the future) – a counterperformativity not of the market but the world. The absolute distance between now and the future is approximated for those who can attach to the future as an intensive space of immediate visibilityRoffe: “absolute surfaces are intensive surfaces,” in Abstract Market Theory, p. 70. – mainly by way of leveraging resolution technowledge; it is inaccessible for those whose obligations are defined by debt as a bond to the past.The space for this article does not allow a discussion of leverage and debt in the social order. Elsewhere I make use of this divide to offer a reading of information capitalism’s social class relations, in which what I call the leverage class revels in good prospects, and the different tiers of the debt-classes are tied to past obligations and illiquid presents, which annihilate the possibilities of the future.

EFFICIENT MARKET POST-UTOPIA

Performativity is not about creating but about making happen. —Michel CallonMichel Callon, CSI Working Papers Series, 2006, p. 22.

N. Katherine Hayles reminds us that “for information to exist, it must always be instantiated in a medium.”Hayles, How we Became Posthuman, p. 13. Bots act (but not always interact) on the infrastructure. To paraphrase Marshall McLuhan, they “massage”“Massaging” is used here in a playful way similar to Marshall McLuhan’s. His dictum, “The medium is the message,” in Understanding Media (1964), in which the medium shapes “the scale and form of human association and action,” here, is extended to the algorithmic realm and the effects of bots on both the individual and political body. Space does not allow engaging more deeply with McLuhan’s media theory and its relevance for algorithmic media. See McLuhan, Understanding Media: The Extensions of Man. New York: McGraw-Hill, 1964. an electronic “body” whose environment (market) they reconfigure into an algorithmic space. At the center of HFT are developers who speed up transactions to the level of microseconds. Profit is not primarily being sought by dynamic hedging in an uncertain environment. HFT is less engaged with implied volatility and the pricing of derivatives. Hence, quants – not long ago the masters of this universe – are at the threshold of being replaced by big data systems.See, for example, Nathaniel Popper, “The Robots Are Coming for Wall Street,” The New York Times Magazine, February 26, 2016 (http://www.nytimes.com/2016/02/28/magazine/the-robots-are-coming-for-wall-street.html). In this technosphere, the “core” of the market – the order book – is being exploited directly and with high volume in the attempt to amass small but (deemed relatively) riskless profits by reading, interfering with, and controlling the signals sent by the matching engine. The production of risk turns into an operational hazard of optimizing infrastructure, hardware, code, and market infrastructure (including exchange places and order types) rather than a mathematically scrutinized pocket of uncertainty.
And while the complex algorithms of derivative trading serve the calculation and recalibration of all the prices in the derivative universe, HFT algos need to be simple in order to facilitate low latency interaction. Massive amounts of data are analyzed, but the trading logistics is streamlined to happen in a flash.

As regards the technological incorporation of financial markets today and their infrastructure, the principal resolution threshold is the visibility of the order book – the information is (in the textbook ideal) visible to all market participants and can be acted upon instantly. The Regulation National Market System (Reg NMS), for example, was enacted to establish such a market ideal, but was ‘instantly’ exploited by execution rates faster than price consolidation.

The introduction of Regulation NMS in 2007 triggered the dramatic surge of HFT volume in US equity markets. Not well understood at the time was that for-profit electronic exchanges had artificially spurred this volume growth, catering to ‘the new market makers’ by providing HFTs specialized features and discriminatory advantages that dovetailed with HFT strategies. Indeed, by circumventing the purpose and intent of Regulation NMS through a myriad of legal exceptions and clever regulatory workarounds, electronic exchanges have assisted HFTs in exploiting market fragmentation for mutual gain at the expense of institutional investors.Haim Bodek in his talk on the topic of “The Problem of HFT” at the Denver Security Trader Association ‒ 43rd Annual Convention, July 12, 2013 (http://haimbodek.com/past_events.html).

The crucial term for real-time action is “instant,” as it opens up to the whole gamut of technowledge that constantly redefines latency and speed horizons – and therefore the increment of an actionable instant as well as the constriction of immediate visibility to resolution machines. The outspoken HFT critic Bodek ascribes the “cannibalistic” acceleration to competitive advantage:

Since 2007, we saw compression in the algorithm trading space where the profit margins approached near zero. And I am part of that problem. I ran my firm specifically to tighten up markets. We sometimes call that the race to the bottom in the business. […] What is the activity that’s driving that race to the bottom? You say, ‘If I can make a near-risk-free fraction of a cent and even if the whole day would have demanded a little bit more, I’m happy to do that now even if we barely make a profit because I’m basically taking away the opportunity for someone else to make a profit.’ […] The strategy, which many of the algorithm trading firms did, was basically market share and just bring it to a place where our competition couldn’t handle it.Haim Bodek, CONTINGENT ETHICS: Portrait of a Philosphies series II, 2014, single channel video, [0:14:20]. (https://vimeo.com/channels/aor)

As the financial engineer, philosopher, and automation critic Elie Ayache points out, however, the scientific paradigm behind quantitative trading and automation hasn’t changed – and thus implicitly refutes Rishi K. Narang’s assertion that “quantitative trading can be defined as the systematic implementation of trading strategies that human beings create through rigorous research.”Rishi K. Narang, Inside the Black Box: The Simple Truth About Quantitative Trading. Hoboken, NJ: Wiley, 2015, p. xi. To Ayache, automated trading is not a new scientific method (an achievement he reserves for the volatility smile problem), but a new wave of exploitation within the probability paradigm and its futile scales:

The market is the only place where the qualitative absolute event, the one that is irreducible to measure and scale and probability, finds quantitative expression, in a material medium borne by numbers, or rather prices. The market is quantitative history. One should keep in mind this contradiction in terms: one should remain aware that the historical event is incalculable and unquantifiable because it precedes any scale; and then understand the extraordinary nature of price (and of its medium: the market) as the quantification of that unquantifiability. This is why the market is truly the technology of the future. You have to realize that price is not a number. Quantifying the event (translating it into numbers) is impossible; yet the market is such a translation, precisely in so far as it takes place outside of possibility. “Quantitative history” does not mean that the event is being forced into the mold of numbers. Rather, a quantity, a number of an extraordinary nature, has been found such that history can be quantified.Elie Ayache, The Medium of Contingency, 2015, p. 52.

In a nutshell, for Ayache the market is real, whereas probability is not.Ayache’s insistence on finance as a body constituted by human traders in the trading pit evokes N. Katherine Hayles’ question, “If we can capture the Form of ones and zeros in a nonbiological medium – say, on a computer disk – why do we need the body’s superfluous flesh?”. See Hayles, How we Became Posthuman, p. 13. To his mind, the hard problem of the volatility smile has not been resolved, nor has it been tackled in algorithmic trading on the supposition that the (option) pricing technology (BSM reversed) works.
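The phrase “BSM reversed” can be read as implied volatility: instead of feeding a volatility into the Black-Scholes-Merton formula to obtain a price, the traded price is taken as given and the formula is solved backwards for the volatility. What follows is a minimal sketch under that reading, assuming a European call without dividends and a simple bisection search. The function names and all numerical inputs are hypothetical and are not taken from Ayache or from market data.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bsm_call(spot, strike, rate, vol, t):
    """Black-Scholes-Merton price of a European call (no dividends)."""
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    return spot * norm_cdf(d1) - strike * math.exp(-rate * t) * norm_cdf(d2)


def implied_vol(price, spot, strike, rate, t, lo=1e-6, hi=5.0, tol=1e-8):
    """Solve bsm_call(vol) = price for vol by bisection.

    This works because the call price increases monotonically with vol.
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if bsm_call(spot, strike, rate, mid, t) > price:
            hi = mid
        else:
            lo = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Round trip with hypothetical inputs: price a call at 20% volatility,
# then recover that volatility from the price alone.
price = bsm_call(spot=100.0, strike=100.0, rate=0.01, vol=0.20, t=1.0)
recovered = implied_vol(price, spot=100.0, strike=100.0, rate=0.01, t=1.0)
print(round(recovered, 6))
```

The round trip only shows that the inversion is mechanically well defined. The smile problem arises when this procedure is applied to real quotes across strikes and the recovered volatilities fail to agree, although the model assumes a single one.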

In the classic form of HFT, algorithmic trading accelerates the exploitation of an old paradigmHow this plays out in financial corporations was shown by Karen Ho in: Liquidated: An Ethnography of Wall Street. Durham, NC, and London: Duke University Press, 2009. materially embedded in the computer-powered calculative evaluation of massive data sets. Prediction machines attempt to evade their unpredictable contingent event by trading in fractions of a second.Knight Capital Group’s loss is an instance of an unpredictable event within the black box itself. On August 1, 2012, the HFT firm lost over 400 million dollars in 30 minutes due to a technical error, which brought it to the brink of bankruptcy. Nanex, who analyzed their trades, commented, “[T]he glitch led to 4 million extra trades in 550 million shares that would not have existed otherwise.” See (http://www.nanex.net/aqck2/3522.html); and (http://www.bloomberg.com/news/articles/2012-08-02/knight-shows-how-to-lose-440-million-in-30-minutes). They reorganize the market into an extraction of price from big data. This performative paradigm exploits a future it doesn’t know and doesn’t need to know as it meets it immediately. The production of risk, a potentially massive concept for complex societies and their needs and desires, complexifies price without producing a present in which it translates back to value. Rather, it produces massive volatilities in the social realm – resolution dissolves to leveraging power in markets that are deemed to operate in accordance with regulations and laws.

Face to face with an increasingly efficient market, however, even those at the forefront of technological innovation run into massive “resistance” – trading a market without utilizable spread is like hitting a wall at full speed. Market-makers operate on the spread to provide liquidity, hedge their own risk, and make profit, whether they are locals or HFT. But with speed at a technological threshold that high-end competitors can no longer exploit against one another (see the Bodek quote above), the utopia of neoclassical economics meets its end of time: the realization of perfect efficiency is at the same time the end of the market. But it is evidently not the end of trading. As always there are some who think and act outside the box. This time, however, it is not quantitative or technological innovation that allows for the exploitation of profits. Increasingly, competitive advantage is only possible by rigging the market in ways that go far beyond the machinations portrayed by books like Dark Pools or Flash Boys. Abusive and collusive behaviors undermine or circumvent regulation (euphemistically called “regulation arbitrage”), market infrastructure, and order-book microstructure, as whistleblower cases and legal settlements have shown.Besides Bodek’s cases, see also, for example: “Whistleblower award for NYSE fine goes to HFT critic,” MarketWatch, March 1, 2016 (http://www.marketwatch.com/story/whistleblower-award-for-nyse-fine-goes-to-hft-critic-2016-03-01); U.S. Securities and Exchange Commission, “SEC Charges New York-Based High Frequency Trading Firm …,” 2014-229 [online](https://www.sec.gov/News/PressRelease/Detail/PressRelease/1370543184457); and Victor Golovtchenko, “Dark Pools Settlements to Cost Barclays, Credit Suisse Over $150m,” Finance Magnates, January 2, 2016 (http://www.financemagnates.com/forex/regulation/dark-pools-settlements-to-cost-barclays-credit-suisse-over-150-mln/).
Heavily invested intellectually in the old paradigm of probability evaluation and profit making running on survival instinct, capitalist hypercompetition has opened a door towards the dark side of the efficient market in which the law, regulation, and exchanges are part of strategic management. Risk is implemented into the very institutions that were established to govern against it.

Revised excerpts from Gerald Nestler, Flash Renegades. From an Aesthetics to a Politics of Resolution. Algorithmic Finance and the 2010 Flash Crash, 2016 (publication in preparation).

Image series: Gerald Nestler, RESOLUTIONIZATIONS. self-organized | self-regulated | mythological, Photographs of the hedge fund Sang Lucci and of high-resolution visualizations of Flash Crashes (courtesy Nanex LLC), 4 prints, approx. 30 x 50 cm, 2015, the artist and Nanex LLC.